📖 Introduction

Text-to-SQL lets users query databases in natural language and is widely used across industries. Because new, more powerful large language models (LLMs) emerge every few months, fine-tuning each of them for Text-to-SQL is costly, labor-intensive, and error-prone. Zero-shot Text-to-SQL, which relies on the knowledge and reasoning capabilities already encoded in LLMs without task-specific fine-tuning, is a promising but more challenging alternative.

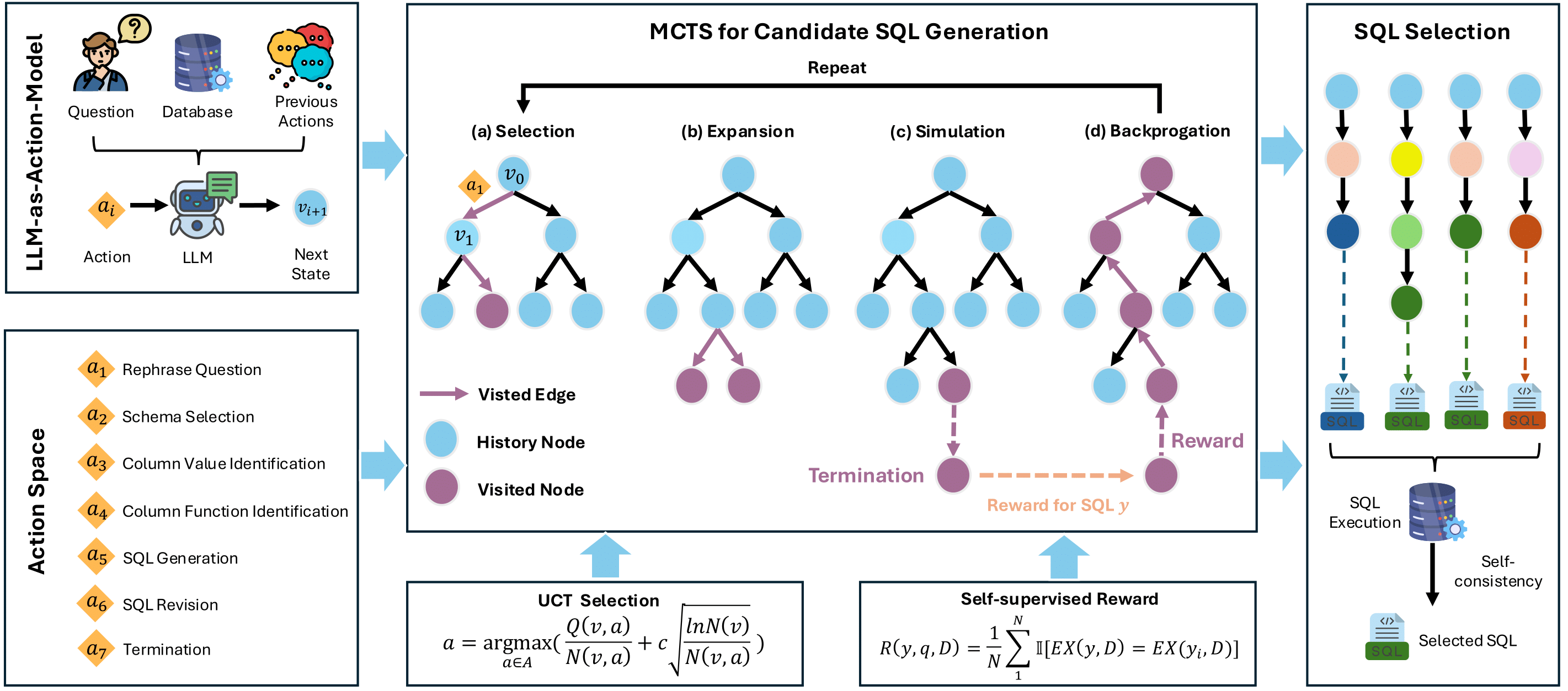

To address this challenge, we propose Alpha-SQL, a novel approach that uses a Monte Carlo Tree Search (MCTS) framework to iteratively infer SQL construction actions based on partial SQL query states. To enhance the framework's reasoning capabilities, we introduce LLM-as-Action-Model to dynamically generate SQL construction actions during the MCTS process, steering the search toward more promising SQL queries. Moreover, Alpha-SQL employs a self-supervised reward function to evaluate the quality of candidate SQL queries, making query generation more accurate and efficient.

Experimental results show that Alpha-SQL achieves 69.7% execution accuracy on the BIRD development set, using a 32B open-source LLM without fine-tuning. Alpha-SQL outperforms the best previous zero-shot approach based on GPT-4o by 2.5% on the BIRD development set.

🛠️ Implementations

Alpha-SQL implements a novel framework that combines Monte Carlo Tree Search (MCTS) with Large Language Models (LLMs) for zero-shot Text-to-SQL generation. The implementation consists of three key components:

1. MCTS-based Search Framework: The system models SQL generation as a search problem, where each node represents a partial SQL query state and edges represent SQL construction actions. The MCTS process iteratively explores the search space to find optimal SQL queries through selection, expansion, simulation, and backpropagation phases.

2. LLM-as-Action-Model: To enhance reasoning capabilities, we introduce seven distinct reasoning actions:

- Question Rephrasing: Decomposes complex questions into structured formats

- Schema Selection: Identifies relevant database schema elements

- Column Value Identification: Extracts filtering conditions from questions

- Column Function Identification: Determines necessary SQL functions

- SQL Generation: Constructs SQL queries using a divide-and-conquer approach

- SQL Revision: Corrects invalid queries based on execution feedback

- Termination: Finalizes the SQL generation process

3. Self-Supervised Reward Function: The system employs a self-consistency-based reward mechanism that evaluates SQL queries by comparing execution results across multiple sampled queries. Because the reward is derived from agreement among the sampled queries, it requires no annotated data.
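The self-consistency idea in component 3 can be illustrated with a small sketch. This is not the Alpha-SQL implementation: the function names (`execute`, `self_consistency_rewards`), the toy `singer` table, and the choice to score each candidate by the fraction of samples that share its execution result are all assumptions made for illustration.

```python
import sqlite3
from collections import Counter

def execute(conn, sql):
    """Run a query; return its rows as a frozenset, or None if it fails.

    Assumption: results are compared as unordered sets of rows, so row
    order and duplicate rows are ignored.
    """
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None
    return frozenset(rows)

def self_consistency_rewards(conn, candidates):
    """Score each candidate by the share of samples with the same result."""
    results = [execute(conn, sql) for sql in candidates]
    counts = Counter(r for r in results if r is not None)
    n = len(candidates)
    # Queries that fail to execute receive a reward of 0.
    return [counts[r] / n if r is not None else 0.0 for r in results]

# Toy database (hypothetical schema, for illustration only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE singer (name TEXT, age INTEGER);
INSERT INTO singer VALUES ('Ann', 30), ('Bob', 45), ('Cy', 52);
""")

candidates = [
    "SELECT name FROM singer WHERE age > 40",
    "SELECT name FROM singer WHERE age >= 41",  # same result as the first
    "SELECT name FROM singer WHERE age > 50",   # different result
    "SELECT nme FROM singer",                   # invalid column, fails
]
rewards = self_consistency_rewards(conn, candidates)
print(rewards)
```

In this toy run the two agreeing queries receive the highest reward, the outlier a lower one, and the failing query zero, all without a gold SQL query.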

Please refer to our paper for more details.
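The four MCTS phases named in component 1 (selection, expansion, simulation, backpropagation) can be sketched on a toy problem. Everything below is an assumption for illustration: the action list is a simplified subset of the action names, actions are chosen at random rather than by an LLM, `toy_reward` is a placeholder for the self-supervised reward, and UCT with exploration constant 1.4 is a common textbook selection rule rather than a confirmed detail of Alpha-SQL.

```python
import math
import random

# Hypothetical, simplified action names; the real action model is an LLM.
ACTIONS = ["rephrase_question", "select_schema", "generate_sql",
           "revise_sql", "terminate"]

class Node:
    """A search-tree node holding a partial reasoning state."""
    def __init__(self, state, parent=None):
        self.state = state          # tuple of actions applied so far
        self.parent = parent
        self.children = []
        self.visits = 0
        self.total_reward = 0.0

def is_terminal(state):
    return bool(state) and state[-1] == "terminate"

def valid_actions(state):
    # Toy rule: each action may be used at most once per trajectory.
    return [a for a in ACTIONS if a not in state]

def toy_reward(state):
    """Placeholder reward in [0, 1], standing in for the real reward."""
    if "generate_sql" not in state:
        return 0.0
    gen = state.index("generate_sql")
    reward = 0.5
    if "select_schema" in state[:gen]:
        reward += 0.25
    if "revise_sql" in state[gen:]:
        reward += 0.25
    return reward

def uct(child, parent_visits, c=1.4):
    """Average reward plus an exploration bonus for rarely visited children."""
    exploit = child.total_reward / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore

def select(node):
    """Selection: descend through fully expanded nodes using UCT."""
    while (not is_terminal(node.state)
           and len(node.children) == len(valid_actions(node.state))):
        parent = node
        node = max(parent.children, key=lambda ch: uct(ch, parent.visits))
    return node

def expand(node):
    """Expansion: add one child for an untried action (no-op if terminal)."""
    if is_terminal(node.state):
        return node
    tried = {child.state[-1] for child in node.children}
    untried = [a for a in valid_actions(node.state) if a not in tried]
    child = Node(node.state + (random.choice(untried),), parent=node)
    node.children.append(child)
    return child

def simulate(state):
    """Simulation: apply random actions until a terminal state is reached."""
    while not is_terminal(state):
        state = state + (random.choice(valid_actions(state)),)
    return state

def backpropagate(node, reward):
    """Backpropagation: update statistics from the leaf up to the root."""
    while node is not None:
        node.visits += 1
        node.total_reward += reward
        node = node.parent

def mcts(num_rollouts):
    root = Node(())
    best_state, best_reward = None, -1.0
    for _ in range(num_rollouts):
        leaf = expand(select(root))
        final_state = simulate(leaf.state)
        reward = toy_reward(final_state)
        backpropagate(leaf, reward)
        if reward > best_reward:
            best_state, best_reward = final_state, reward
    return best_state, best_reward, root

random.seed(0)
best_state, best_reward, root = mcts(num_rollouts=24)
print(best_state, best_reward)
```

Each rollout ends in one complete trajectory, so running more rollouts yields more candidates to choose from, which is the mechanism the rollout experiments below examine.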

🧪 Experiments

We conduct extensive experiments to evaluate Alpha-SQL's performance on both the BIRD and Spider datasets. The results demonstrate the effectiveness of our approach on zero-shot Text-to-SQL tasks.

Main Results

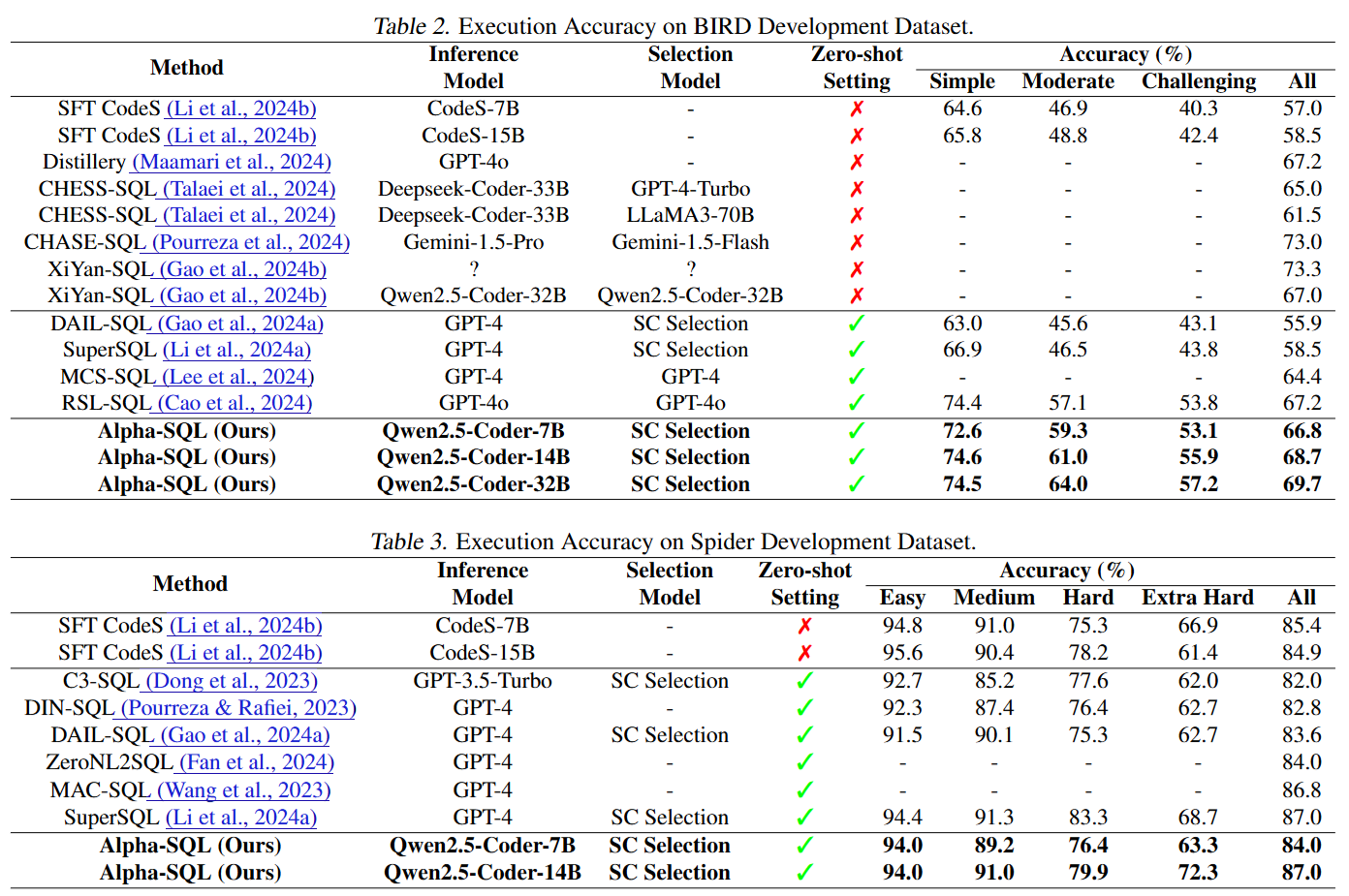

Performance on BIRD Dataset: Alpha-SQL achieves 69.7% execution accuracy on the BIRD development set using a 32B open-source LLM without fine-tuning. This performance:

- Outperforms the best previous zero-shot approach based on GPT-4o by 2.5%

- Surpasses many methods that require fine-tuning

- Trails only methods that fine-tune proprietary models such as Gemini-1.5-Flash

Performance on Spider Dataset: Alpha-SQL with Qwen2.5-Coder-14B outperforms existing methods, achieving a 2.1% improvement over SFT CodeS-15B, which was specifically fine-tuned for the Spider dataset.
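For readers unfamiliar with the metric, execution accuracy can be sketched as follows. This is a minimal illustration, not the official BIRD or Spider evaluation script: the helper names, the toy `singer` table, and the choice to compare results as unordered sets of rows are assumptions.

```python
import sqlite3

def run(conn, sql):
    """Execute a query and return its rows as a set, or None on error."""
    try:
        return set(conn.execute(sql).fetchall())
    except sqlite3.Error:
        return None

def execution_accuracy(conn, pairs):
    """Fraction of (predicted, gold) pairs whose execution results match."""
    correct = 0
    for predicted, gold in pairs:
        predicted_rows = run(conn, predicted)
        # A prediction that fails to execute never counts as correct.
        if predicted_rows is not None and predicted_rows == run(conn, gold):
            correct += 1
    return correct / len(pairs)

# Toy database (hypothetical schema, for illustration only).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE singer (name TEXT, age INTEGER);
INSERT INTO singer VALUES ('Ann', 30), ('Bob', 45), ('Cy', 52);
""")

pairs = [
    # Different SQL text, same result: counted as correct.
    ("SELECT name FROM singer WHERE age > 40",
     "SELECT name FROM singer WHERE age >= 41"),
    ("SELECT COUNT(*) FROM singer", "SELECT COUNT(name) FROM singer"),
    # Different result: counted as wrong.
    ("SELECT name FROM singer WHERE age > 50",
     "SELECT name FROM singer WHERE age > 40"),
    # Invalid column: counted as wrong.
    ("SELECT nme FROM singer", "SELECT name FROM singer"),
]
accuracy = execution_accuracy(conn, pairs)
print(accuracy)
```

The metric rewards queries that return the right answer even when their text differs from the gold SQL, which is why it is preferred over exact string matching.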

Impact of MCTS Rollouts

We investigate how the number of MCTS rollouts affects the performance of Alpha-SQL:

- As the number of rollouts increases from 1 to 24, the execution accuracy steadily improves

- The upper bound of performance (measured by Pass@N) shows a similar upward trend, up to 84% at Pass@24

- This demonstrates that our MCTS framework effectively explores the search space and finds better SQL queries with more rollouts
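The relationship between rollouts and the Pass@N upper bound can be made concrete with a short sketch. Pass@N is taken here to mean that a question counts as solved if at least one of its first N candidate queries is correct; that reading, the function name, and the toy correctness flags are assumptions for illustration, not values from the paper.

```python
def pass_at_n(correct_flags, n):
    """Fraction of questions with at least one correct candidate in the first n.

    correct_flags[i][j] is True if candidate j for question i executes to
    the same result as the gold query.
    """
    solved = sum(any(flags[:n]) for flags in correct_flags)
    return solved / len(correct_flags)

# Toy data: 4 questions, 3 candidates each (made-up flags).
correct_flags = [
    [False, True, True],
    [True, True, False],
    [False, False, False],
    [False, False, True],
]

pass_at_1 = pass_at_n(correct_flags, 1)
pass_at_3 = pass_at_n(correct_flags, 3)
print(pass_at_1, pass_at_3)
```

Pass@N can only stay flat or rise as N grows, and it bounds the final accuracy from above because the system must still pick a single query from the N candidates.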

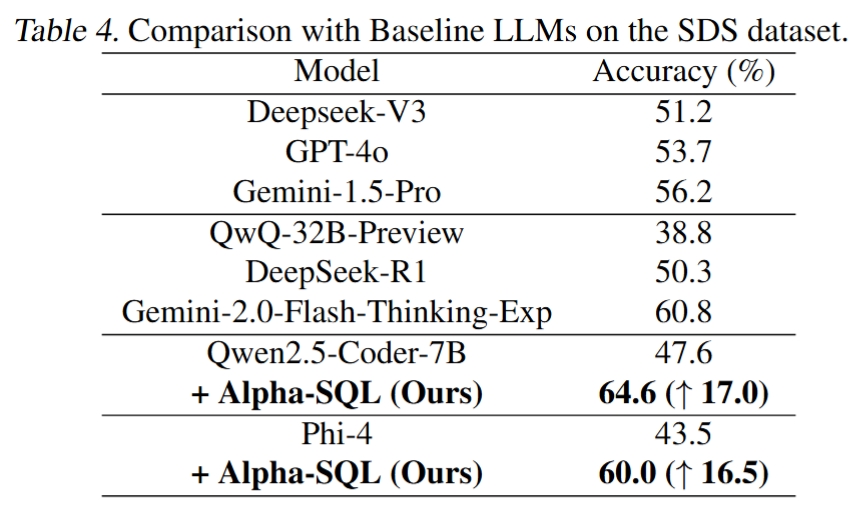

Comparison with Baseline LLMs

Our experiments show that Alpha-SQL, utilizing a model with only 7B parameters:

- Surpasses all baseline models in performance

- Outperforms sophisticated reasoning-optimized models like Gemini-2.0-Flash-Thinking-Exp

- Improves the accuracy of Qwen2.5-Coder-7B and Phi-4 by 17.0% and 16.5%, respectively

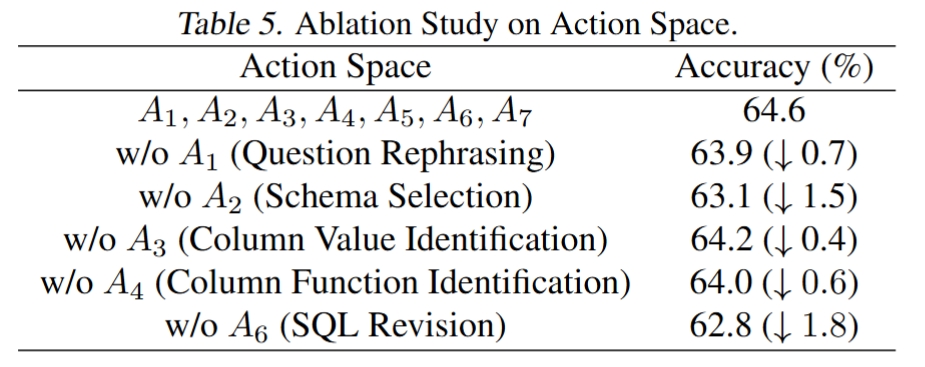

Ablation Studies

We conduct ablation studies to validate the effectiveness of our reasoning actions:

- Removing any action from the original action space negatively impacts performance

- The SQL Revision action shows particular significance, leveraging database interaction for query correction

- Performance improves with more MCTS rollouts, demonstrating the effectiveness of our search strategy

Please refer to our paper for more detailed experimental results and analysis.

✏️ BibTeX Citation

@inproceedings{alpha-sql,
  author    = {Boyan Li and Jiayi Zhang and Ju Fan and Yanwei Xu and Chong Chen and Nan Tang and Yuyu Luo},
  title     = {Alpha-SQL: Zero-Shot Text-to-SQL using Monte Carlo Tree Search},
  booktitle = {Forty-Second International Conference on Machine Learning, {ICML} 2025, Vancouver, Canada, July 13-19, 2025},
  publisher = {OpenReview.net},
  year      = {2025}
}